The story of today is written in the decisions of yesterday

The history of our remarkable world is written in the sometimes small and seemingly innocuous decisions made by individuals in the pursuit of their goals. These decisions have forged alliances, taken lives, broken hearts, created works of art, started wars, and, ultimately, radically reshaped the trajectory of human history.

Explore these decisions and how they dramatically shifted the fortunes of the societies that followed in their wake.

Recommended Reading

Ancient Sparta: The History of the Spartans

Ancient Sparta is one of the most well-known cities in Classical Greece. The Spartan society…

US History Timeline: The Dates of America’s Journey

When compared to other powerful nations such as France, Spain, and the United Kingdom, the…

iPhone History: Every Generation in Timeline Order 2007 – 2023

Every generation or two, a technological advancement is made that is so significant it radically…

The Mason-Dixon Line: What Is It? Where is it? Why is it Important?

The British men in the business of colonizing the North American continent were so sure…

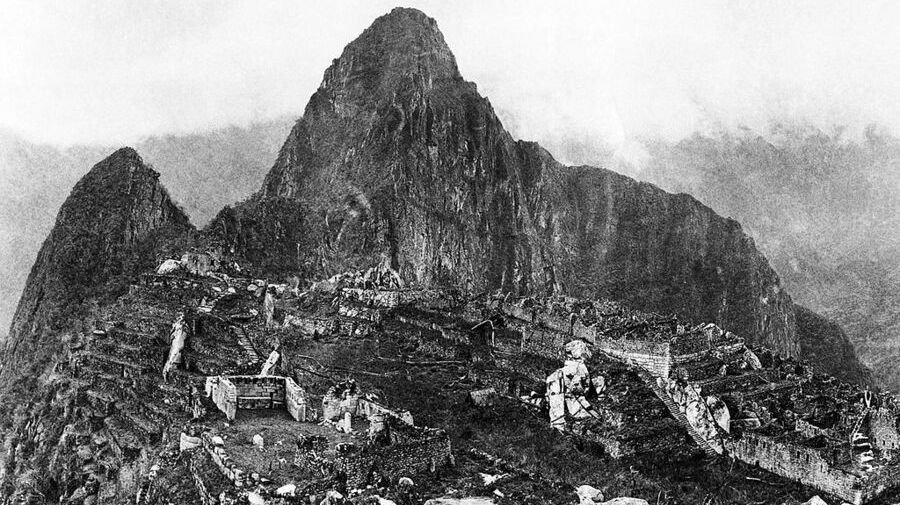

Ancient Civilizations Timeline: The Complete List from Aboriginals to Incans

Ancient civilizations continue to fascinate. Despite rising and falling hundreds if not thousands of years…

The Complete History of Social Media: A Timeline of the Invention of Online Networking

Social media has become an integral part of all of our lives. We use it…

“A people without knowledge of their past history, origin, and culture, is like a tree without roots.”

Marcus Garvey

Latest Articles

The Greek God Family Tree: A Complete Family Tree of All Greek Deities

The Greek god family tree is extremely complex. The standard lines drawn between generations often…

Who Invented the CNC Machine? The History of Computer Numerical Control (CNC) Machinery

The invention of CNC machinery revolutionized the manufacturing industry, enabling the automated production of complex…

Who Invented Water? History of the Water Molecule

In the vast expanse of the cosmos, amidst swirling galaxies and celestial wonders, one seemingly…

Who Invented Meth? The Surprising History Revealed

Methamphetamine, commonly referred to as meth, has a history marked by intricacies and evolution. While…

Explore Topics

BIOGRAPHIES

US HISTORY

GODS AND GODDESSES

ANCIENT HISTORY

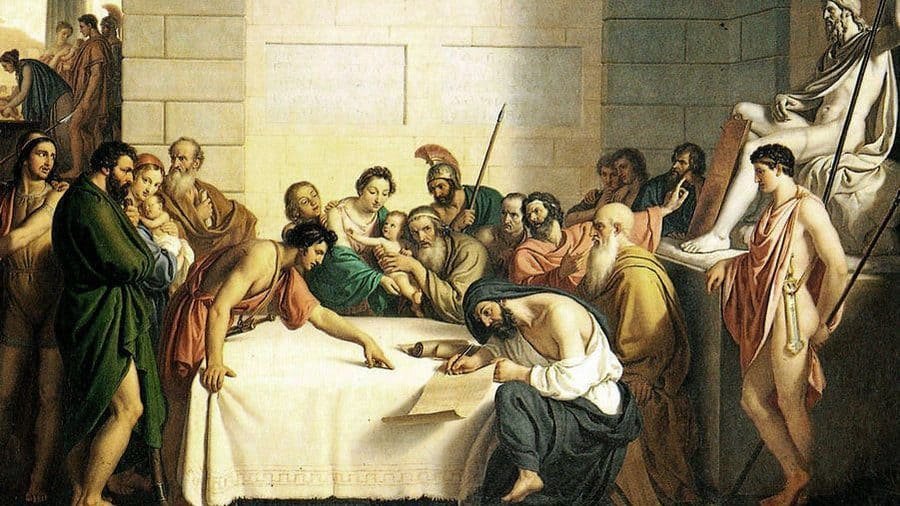

- The Cradle of Civilization: Mesopotamia and the First Civilizations

- Petronius Maximus

- Tiberius Gracchus

- Odysseus: Greek Hero of the Odyssey

HEALTH

Popular Articles

League of Nations: Purpose, WWI, and Failure

The League of Nations was an international organization founded on January 10, 1920, following the…

The Origin of Shepherd’s Pie: The Story of a Classic Irish Dish

A favorite comfort food in Irish pubs and homes, the humble shepherd’s pie – a…

Inventions by Women: Female Inventions That Changed the World

There are numerous inventions that we can thank women for. From treatments for malaria to…

Origin of Pickleball: Pickleball History

Pickleball is a unique blend of tennis, badminton, and table tennis, and has rapidly grown…

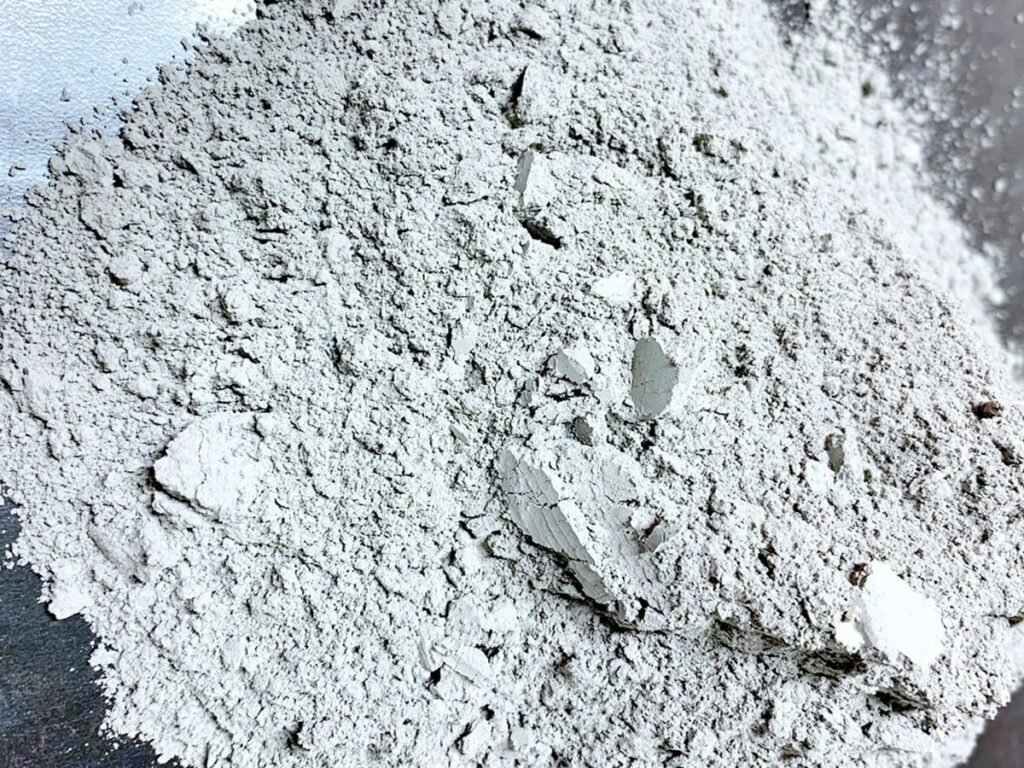

Who Invented Cement? History of Cement from Ancient Times to Today

Cement is a fundamental building material that has been shaping our world for centuries. Its…

Who Invented the Hoodie? Unraveling the Origins of an Icon

The story of who invented the hoodie unfolds a fascinating journey from practicality to fashion…